Remember that WIP #LetsDriveLCD? I am still having some trouble with the MIPI-DSI, and that's part of the VerilogBoy project. Currently I do not have access to any soldering tools, so the plan of making a new revision of the prototype needs to be postponed. In the meantime, I thought it might be a good idea to continue working on the RTL – I started refactoring the code but haven't finished yet. But I need a hardware platform to test on. Well, I forgot to bring my FPGA development board (Xilinx ML505, I really loved that board) with me when I came back from the Christmas holiday… But no problem, I got myself two Pano Logic thin clients (G1 and G2) last year. Though I have to admit, I didn't do much with these units after I got them. Now the time has come, so let's take a look.

We have something to hack

In case you are not familiar with them, let me introduce them first. They were originally thin clients, used to connect to remote desktop servers. What is special about Pano boxes is that they are powered by FPGAs, rather than the ARM or x86 CPUs commonly found in thin clients. They were advertised as a “Zero Client”, meaning there is no (zero) software running on the client. Unfortunately for them, they went bankrupt in 2013. Fortunately for us, these units are now useless to the companies that originally bought them and are being sold for very low prices on places like eBay. It is our turn to repurpose these devices! Of course, Hackaday has already featured them several times:

- Ask Hackaday: We might have some FPGAs to hack

- Racing the Beam on a Thin Client, in FPGAs

- Pac-Man Fever Comes to the Pano Logic FPGA

- Two Joysticks Talk To FPGA Arcade Game Over A VGA Cable

As far as I know, there are 3 generations of Pano Logic clients: the first two look very similar, and the third is slimmer. Unfortunately I have never seen a slim model on eBay. If you know anything about the slim model, please tell me, I am interested. The first generation (G1) model is powered by a Xilinx Spartan-3E XC3S1600E FPGA (1600K system gates, which translates to around 30K LUT4s), with 32MB of on-board LPDDR RAM. The second generation model, depending on the revision, is powered by either a Xilinx Spartan-6 XC6SLX150 (Rev. B) or a Xilinx Spartan-6 XC6SLX100 (Rev. C), both with 128MB of DDR3 memory. The one I own is a Rev. C. Both generations have already been reverse engineered by the community, notably by cyrozap, twj42, and Tom Verbeure. You may find more details about the Pano boxes here: https://github.com/tomverbeure/panologic and https://github.com/tomverbeure/panologic-g2.

Now, which generation should I focus on? Gen 2 is significantly more powerful, but it is getting harder to find on eBay. Gen 1 is powerful enough for my purpose and can still be purchased easily on eBay. I want more people to be able to play with my VerilogBoy code (if any), so I will go with Gen 1. Also, the framework I develop for Gen 1 devices might help others who want to play around with their own G1s.

Talking about the G1...

So, the Gen 1 has already been reverse engineered, and someone even published its schematics online. It should be trivial to just port the existing code to the G1, right? No. There are still several issues to be solved:

- The G1 does not have any user GPIO. In order to attach a game controller, one would need to either repurpose some of the IOs (like the panoman project, which used the I2C lines from the VGA port) or use a USB joystick, which means I need a host-side USB stack running on a soft core on the FPGA. As I have said, I hope more people can play with this, so I will go with the USB solution.

- The G1 doesn’t have any on-board storage large enough to hold the game. The size of a typical GameBoy game is between 32KB and 4MB. Famous titles like Pokemon and Legend of Zelda are around 1MB. The G1 only has a 1MB SPI Flash, and 600KB of it is occupied by the bitstream. Even worse, the G1 doesn’t have an SD or CF card slot, so we need to load the game from either Ethernet or USB. Well, since I already need USB for the joystick, I will use USB for loading games as well.

Now where will the games be loaded to? The only storage element that can hold a 1MB game is the LPDDR memory. At first I thought it would be easy: I just need to use Xilinx’s MIG to generate a memory controller and use it as if it were an SRAM, as I have done before on the ML505. Well, no. The Spartan-3E MIG doesn’t support LPDDR, and its bus width is limited to 16 bits (the memory on the Pano is a 32-bit one). And I couldn’t find any open source LPDDR controller core for the Spartan-3E online.

Conclusion: I have to make one myself.

So this is what this post is all about: making an LPDDR controller for the Spartan-3E.

DDR basics

I don't really want to go through all the details of DDR SDRAM, as there is tons of information online. Here I will just go through some basic things to keep in mind:

- If you ignore the initialization and refresh required by the SDRAM, the protocol is really simple. Just like an SRAM, the host sends out the address, and the memory sends back the data.

- Unlike normal SRAMs, which use linear addressing, SDRAMs use 2D addressing. The host needs to first send out the row address, then the column address. But you may also think of it as a linear address, with the high bits and low bits transferred at different times.

- Since everything is relatively high speed, delay becomes a thing to consider. Interconnect and logic delays become significant relative to the clock period.

- Rise time and fall time, again relative to the clock period, become significant. That's why in a DDR controller it is common to manually delay or phase shift the signal, so the clock rising edge is aligned not with the edge of the data, but with the center of the data.

- There is a signal called DQS, or data strobe, functioning as a clock for the data lines. Usually each byte group of data lines has a corresponding DQS line. This allows the designer to only control the delay within one byte, rather than across different bytes.
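To make the 2D addressing point concrete, here is a minimal sketch in C of splitting a linear word address into the row part (sent first) and the column part (sent second). The bit widths and the names `ROW_BITS`, `COL_BITS`, and `sdram_split` are assumptions chosen for illustration, not values taken from the Pano's memory chip or from the MIG.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical geometry for illustration: 12 row bits, 9 column bits. */
#define ROW_BITS 12u
#define COL_BITS 9u

typedef struct {
    uint32_t row;    /* high bits, transferred first */
    uint32_t column; /* low bits, transferred second */
} sdram_addr_t;

/* Split a linear word address into the two halves the SDRAM expects. */
static sdram_addr_t sdram_split(uint32_t linear)
{
    sdram_addr_t a;
    assert(linear < (1u << (ROW_BITS + COL_BITS)));
    a.column = linear & ((1u << COL_BITS) - 1u);
    a.row = linear >> COL_BITS;
    return a;
}
```

With these widths, `sdram_split(0x1234)` gives row 0x9 and column 0x34: the same linear address, just transferred in two pieces.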

The Low Power DDR

First, what’s the difference between an LPDDR SDRAM and a DDR SDRAM? Micron has a very nice technical note regarding this: TN4615 – Low Power versus Standard DDR SDRAM. I will just reiterate the main points:

- The DDR uses the 2.5V SSTL-2 IO standard, while the LPDDR uses the 1.8V LVCMOS IO standard (LVCMOS18).

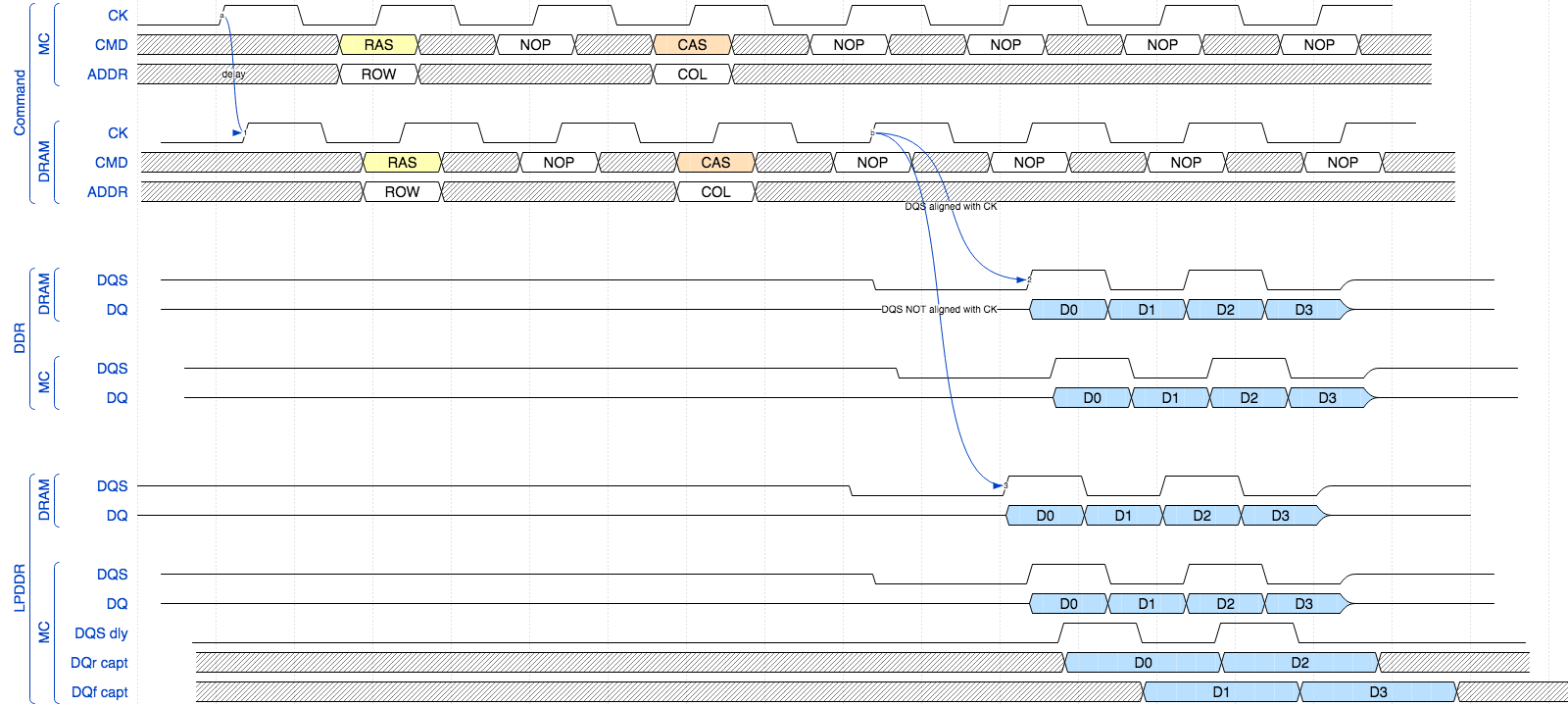

- The DDR has an internal DLL (Delay Locked Loop), which means there is a minimum frequency limitation, but the data bus, data strobe and clock are phase aligned. The LPDDR does not have an internal DLL, which means there is no lower limit on the frequency, but the data bus, data strobe and clock are no longer phase aligned.

- The LPDDR has PASR and TCSR functions to help lower standby current.

- Due to the absence of the DLL, the initialization process is different from DDR.

For my purpose, only points 1, 2, and 4 are relevant.

(Timing diagram showing the difference due to the absence of a DLL)

Where to start?

Writing a DDR controller is all about messing around with delays. One needs to carefully control the delays between signals to make it work, and the input strobe signal needs to be delayed by exactly ¼ of a cycle (a 90 degree phase shift) before it can be used. On a Spartan-3E, probably the only way to precisely delay a signal is to chain LUTs together and build an automatic calibration circuit to compensate for temperature changes. I am just too lazy to write these myself, so I will start with a working DDR controller and adapt it to work with LPDDR memory.
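To get a feel for the numbers, the sketch below computes the quarter-cycle delay for a given clock and how many chained delay elements would be needed to approximate it. The per-element delay passed as `tap_ps` is a made-up parameter for illustration; the real LUT delay depends on the device, routing, temperature and voltage, which is exactly why a calibration circuit is needed.

```c
#include <assert.h>
#include <stdint.h>

/* Delay needed for a 90 degree shift, in picoseconds, for a clock in MHz. */
static uint32_t quarter_period_ps(uint32_t clock_mhz)
{
    assert(clock_mhz > 0);
    return 1000000u / clock_mhz / 4u; /* period in ps, divided by four */
}

/* Number of chained delay elements that comes closest to the target.
 * tap_ps is a hypothetical per-element delay, not a datasheet value. */
static uint32_t taps_for_delay(uint32_t target_ps, uint32_t tap_ps)
{
    assert(tap_ps > 0);
    return (target_ps + tap_ps / 2u) / tap_ps; /* round to nearest */
}
```

At 100MHz the target is 2500ps. If one element happened to take 500ps, 5 taps would be needed; if it drifted to 650ps, the best choice would drop to 4 taps, so a fixed tap count cannot be trusted.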

Then, which one? There are many DDR controllers available online, but few are made for the Spartan-3E. I finally decided to use the MIG as a starting point. Despite often being criticized for being bloated, the MIG has one important feature: it latches the data based on the data strobe (DQS) signal.

As background information, the DDR memory outputs the data and the data strobe signal at the same time, and the host should latch the data on both edges of the data strobe (DQS) signal. Normally, one would want to shift the DQS by 90 degrees, so that each edge of the DQS falls in the middle of the valid data window.

Remember I mentioned the phase relationship issue? Many SDR/DDR controllers are lazy: they latch the incoming data based on the internal clock instead of the data strobe. This works because, if you neglect the delay, DQS should be equivalent to the DRAM CLK, and that CLK comes from the controller's internal clock. This brings two important benefits:

First, the whole datapath is now in one clock domain. If one latches the data using DQS, the datapath is split into two clock domains, the main clock and DQS.

Second, one no longer needs to delay the DQS signal to get a 90 degree shift, as it is not used for latching the data anymore. One still needs a shifted internal clock, but that's trivial to do with the FPGA's internal DLL or PLL. This greatly simplifies the design of the controller.

The downside is basically losing some timing margin, which isn’t a big deal if it is just DDR rather than DDR4. However, it is critical here: on LPDDR, there is no longer a fixed phase relationship between DQS and the clock, so the controller has to use DQS to latch the data.

The MIG is the only one I found that utilizes the DQS, so it is the only choice.

Modifying the MIG

My experience of modifying the MIG was, well, I should say “unexpected”. The adaptation is unexpectedly easy, but things break in unexpected places.

First, start by modifying the initialization process. Believe it or not, this is the ONLY modification required to make the MIG work with LPDDR! (But other modifications are required just to use the MIG at all.)

In the MIG, the initialization is handled by an FSM inside mig_controller_0.v, around line 980. You may check my code here:

https://github.com/zephray/VerilogBoy/blob/refactor/target/panog1/fpga/mig/mig_controller_0.v
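For readers who have not compared the two power-up sequences, here is a rough sketch of them as C tables. The step order follows my understanding of typical DDR and mobile DDR datasheets and is not copied from the modified FSM linked above; the names (`init_step_t`, `ddr_init`, `lpddr_init`, `needs_dll_handling`) are made up for illustration, so check the datasheet of the actual part before relying on it.

```c
#include <assert.h>
#include <stddef.h>

typedef enum {
    STEP_WAIT_200US,
    STEP_PRECHARGE_ALL,
    STEP_AUTO_REFRESH,
    STEP_LOAD_MODE,
    STEP_LOAD_EXT_MODE,
    STEP_LOAD_MODE_DLL_RESET,
    STEP_WAIT_200_CLOCKS
} init_step_t;

/* Standard DDR: the DLL has to be enabled, reset and given time to lock. */
static const init_step_t ddr_init[] = {
    STEP_WAIT_200US,
    STEP_PRECHARGE_ALL,
    STEP_LOAD_EXT_MODE,       /* enables the DLL */
    STEP_LOAD_MODE_DLL_RESET,
    STEP_WAIT_200_CLOCKS,     /* placed right after the reset for simplicity */
    STEP_PRECHARGE_ALL,
    STEP_AUTO_REFRESH,
    STEP_AUTO_REFRESH,
    STEP_LOAD_MODE            /* same settings, DLL reset bit cleared */
};

/* LPDDR: no DLL, so the DLL-related steps disappear. */
static const init_step_t lpddr_init[] = {
    STEP_WAIT_200US,
    STEP_PRECHARGE_ALL,
    STEP_AUTO_REFRESH,
    STEP_AUTO_REFRESH,
    STEP_LOAD_MODE,
    STEP_LOAD_EXT_MODE        /* on LPDDR this holds PASR and similar options */
};

#define STEP_COUNT(a) (sizeof(a) / sizeof((a)[0]))

/* Returns 1 if the sequence contains any DLL-specific step. */
static int needs_dll_handling(const init_step_t *seq, size_t len)
{
    assert(seq != NULL);
    for (size_t i = 0; i < len; i++) {
        if (seq[i] == STEP_LOAD_MODE_DLL_RESET || seq[i] == STEP_WAIT_200_CLOCKS)
            return 1;
    }
    return 0;
}
```

Seen this way, adapting a DDR initialization FSM to LPDDR is mostly a matter of dropping the DLL steps and reordering the mode register writes.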

That is all it takes to make the MIG work with LPDDR. However, one would most likely also want to tweak the MIG’s own behavior a little bit, like changing how the address bits are arranged (I like to make it {row, bank, column}, while the S3E MIG default configuration is {row, column, bank}), or changing the parameters (like setting different burst lengths or CAS latencies).
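The difference between the two address arrangements is easier to see in code. The following C sketch decodes the same linear address both ways; the bit widths (12 row, 2 bank, 9 column) and the function names are assumptions for illustration only and are not read out of the MIG sources.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical geometry for illustration. */
#define ROW_BITS  12u
#define BANK_BITS 2u
#define COL_BITS  9u
#define ADDR_BITS (ROW_BITS + BANK_BITS + COL_BITS)

typedef struct {
    uint32_t row, bank, col;
} dram_addr_t;

/* {row, bank, column}: the column occupies the lowest bits. */
static dram_addr_t map_row_bank_col(uint32_t linear)
{
    dram_addr_t a;
    assert(linear < (1u << ADDR_BITS));
    a.col  = linear & ((1u << COL_BITS) - 1u);
    a.bank = (linear >> COL_BITS) & ((1u << BANK_BITS) - 1u);
    a.row  = linear >> (COL_BITS + BANK_BITS);
    return a;
}

/* {row, column, bank}: the bank occupies the lowest bits. */
static dram_addr_t map_row_col_bank(uint32_t linear)
{
    dram_addr_t a;
    assert(linear < (1u << ADDR_BITS));
    a.bank = linear & ((1u << BANK_BITS) - 1u);
    a.col  = (linear >> BANK_BITS) & ((1u << COL_BITS) - 1u);
    a.row  = linear >> (BANK_BITS + COL_BITS);
    return a;
}
```

With {row, bank, column}, addresses 0 and 1 land in the same bank and row at neighboring columns. With {row, column, bank}, address 1 already moves to bank 1, so consecutive addresses rotate through the banks.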

RISC-V!

Now that the memory controller is here, I should probably connect it to something to test whether it actually works. I could use the provided MIG example, which has a data pattern generator and automatically tests the controller, but I don’t quite like that approach: it would only tell me success or failure. I would like to know more.

So I decided to connect it to a PicoRV32 soft processor and use a piece of memory test code to check whether the memory actually works. Of course, a simple MIG to PicoRV32 bridge is required to make it work. It is nothing more than a (probably inefficient) FSM. You can see the source code here:

https://github.com/zephray/VerilogBoy/blob/refactor/target/panog1/fpga/mig_picorv_bridge.v

(I will incorporate a cache into the bridge, so if you see the cache and want to check the version without it, look at the file's history.)
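As an idea of what such memory test code can look like, here is a small C sketch with two classic checks. It is not the test program from the repository; the function names and the seed parameter are my own, and on the real system `base` would point at wherever the bridge maps the LPDDR.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Address-in-address test: fill the region with values derived from each
 * word's index, then read everything back. Returns the mismatch count. */
static size_t memtest_address(volatile uint32_t *base, size_t words, uint32_t seed)
{
    size_t errors = 0;
    assert(base != NULL);
    for (size_t i = 0; i < words; i++)
        base[i] = (uint32_t)i ^ seed;
    for (size_t i = 0; i < words; i++) {
        if (base[i] != ((uint32_t)i ^ seed))
            errors++;
    }
    return errors;
}

/* Walking-ones test on one location: returns a mask of the data bits that
 * did not read back correctly, which helps spot a single bad DQ line. */
static uint32_t memtest_walking_ones(volatile uint32_t *cell)
{
    uint32_t bad_bits = 0;
    assert(cell != NULL);
    for (unsigned bit = 0; bit < 32; bit++) {
        uint32_t pattern = 1u << bit;
        *cell = pattern;
        bad_bits |= *cell ^ pattern;
    }
    return bad_bits;
}
```

Filling the whole region before reading it back matters: a write-then-immediately-read loop can pass even when address lines are broken, because the same wrong location is hit both times.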

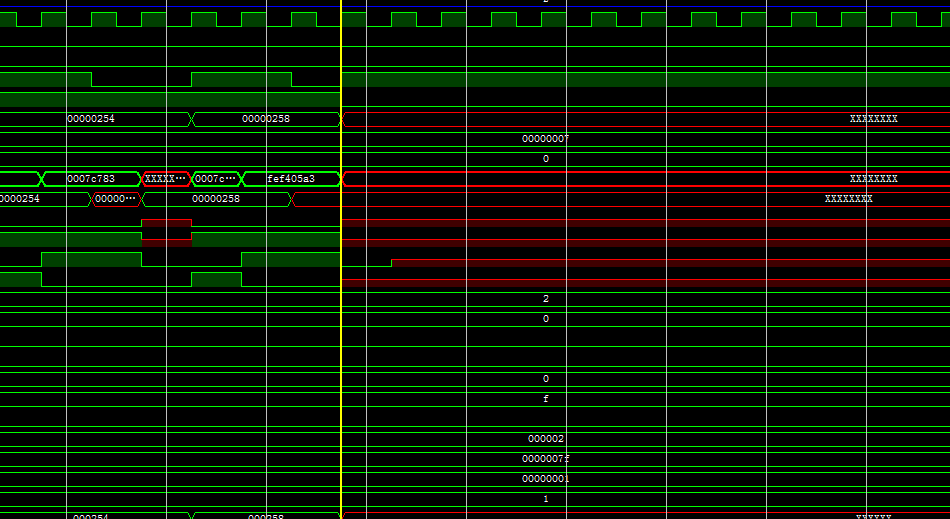

Now the MIG is ready and the CPU is in. Time to generate the bitstream and test? Well, no. Always simulate before trying on the board. Micron provides a Verilog model of the LPDDR RAM, so we can connect it to our board top-level file and run a simulation. Verify that the CPU, bridge, and MIG all work together and that the model doesn’t report any errors.

(The simulation tells you something even a logic analyzer cannot: undefined values. An undefined value would be either 0 or 1 on real hardware, and it would be hard to tell.)

The simulation also passed. Time to generate the bitstream? Not yet. I still need to write the constraint file.

Design Constraint - From behavioral simulation to the actual FPGA board

First is the definition of all the IO pins for the LPDDR. As I have mentioned before, LPDDR uses the LVCMOS IO standard rather than SSTL-I, which means we can no longer use the DIFF-SSTL-I IO standard for the differential clock pins, and the differential clock has to be driven as if it were two single-ended signals. Well, that’s not a big deal here: we just get rid of the MIG’s differential clock buffer and use two inferred single-ended OBUFs instead:

    assign LPDDR_CK_P = clk_100;
    assign LPDDR_CK_N = clk_100_180;

And just FYI, I have tried using DIFF-SSTL-I for the clock signal on LPDDR, with no success: none of the data read back was correct.

Since the DDR controller is so timing sensitive, more delay-related constraints need to be added for the MIG. Luckily, Xilinx has provided them. Start by copying the example UCF file and adding it to the project. Remove everything related to the board, as that is described in our own UCF file. Then, rename the signals to match our design.

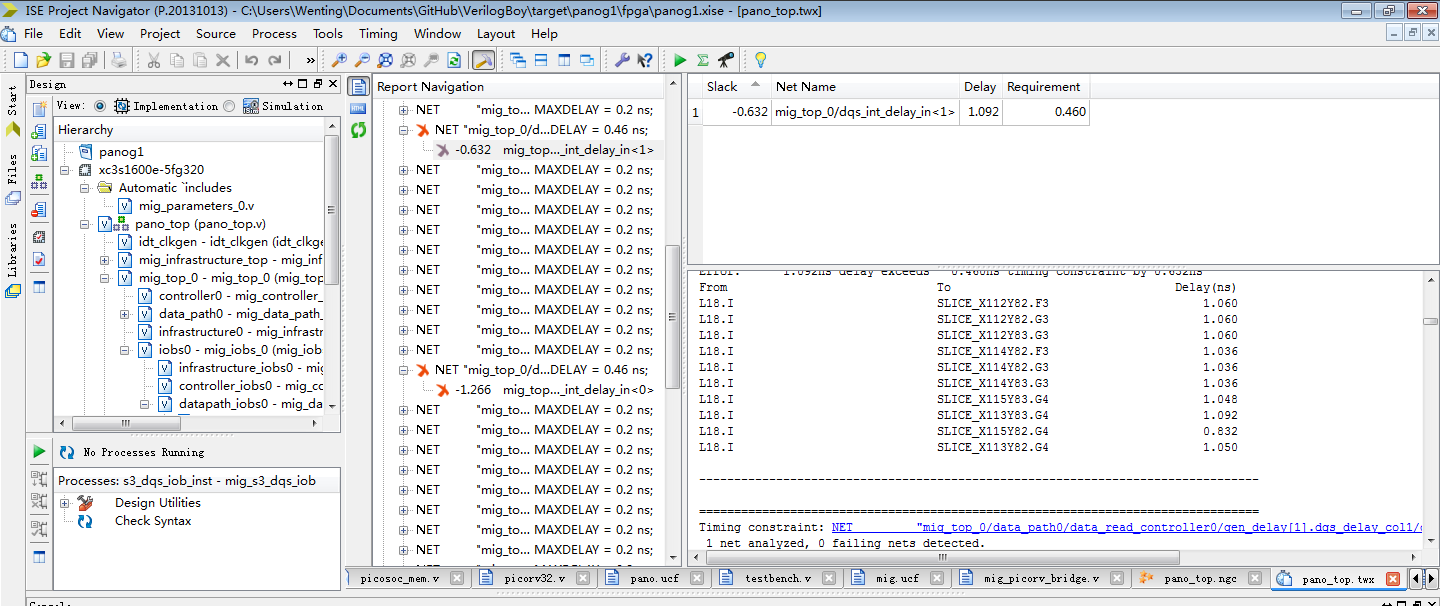

Try to synthesize and implement the design. If ISE complains that it cannot find something, double check using the Post-Synthesis explorer. If it is a reference to a primitive, add a “*” after the path. After fixing all the errors, the implementation should finish, but with tons of failing timing constraints. We can see it is because the IOB is too far away from the CLB:

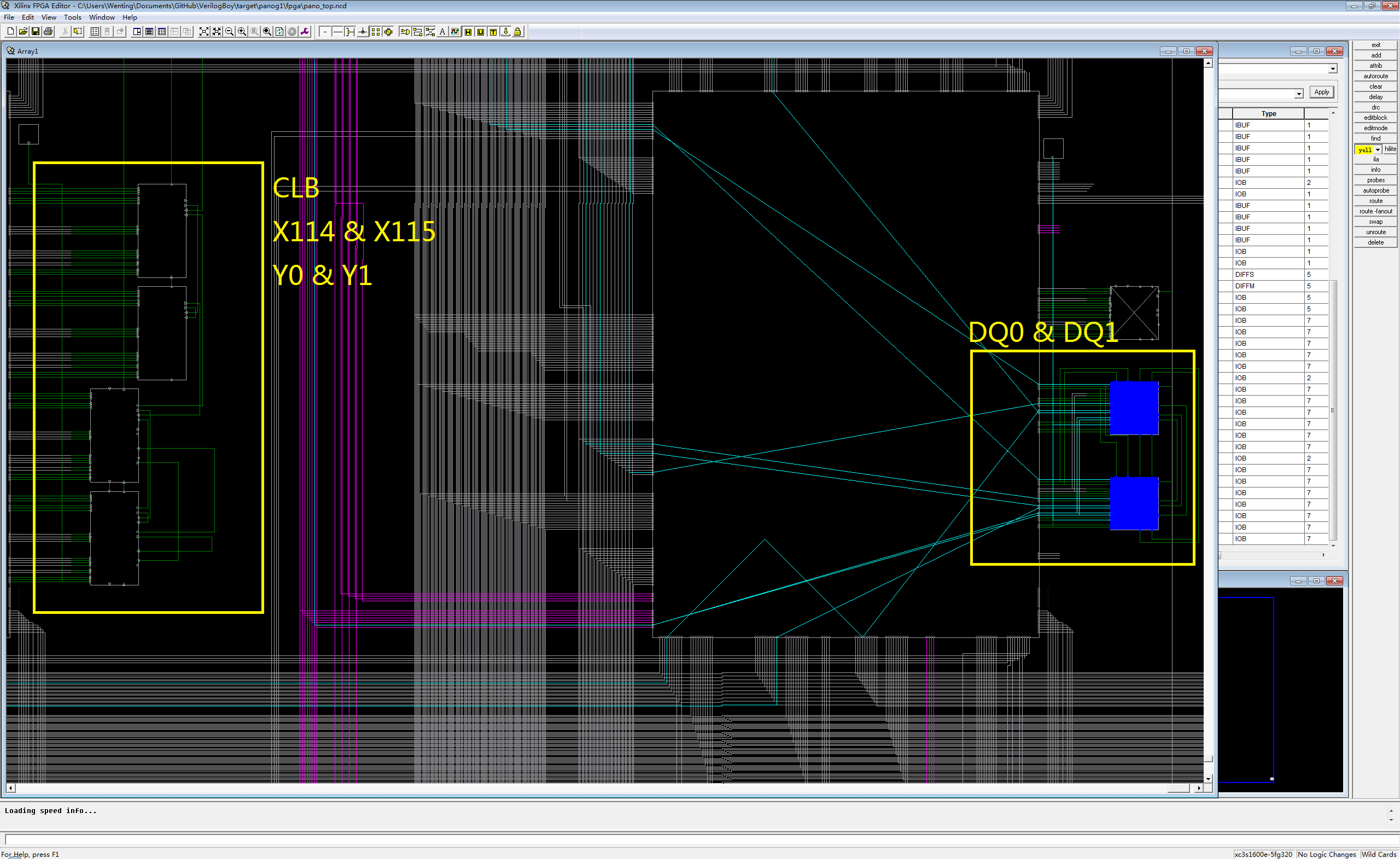

It’s okay, because we are going to fix that. The idea is that the delay line and FIFO should be as close to the pin’s IOB as possible. One can find the CLB closest to an IOB using the FPGA explorer: find the signal on the right and see what the coordinate of the CLB next to it is. Easy.

Here are the CLB Y coordinates right next to each DDR signal. I have found them so you don’t need to do it again.

- DQ0\1 – Y0\1

- DQ2\3 – Y4\5

- DQ4\5 – Y32\33

- DQ6\7 – Y48\49

- DQS0 – Y8\9

- DQ8\9 – Y52\53

- DQ10\11 – Y56\57

- DQ12\13 – Y60\61

- DQ14\15 – Y72\73

- DQS1 – Y64\65

- DQ16\17 – Y84\85

- DQ18\19 – Y88\89

- DQ20\21 – Y96\97

- DQ22\23 – Y104\105

- DQS2 – Y80\81

- DQ24\25 – Y100\101

- DQ26\27 – Y116\117

- DQ28\29 – Y144\145

- DQ30\31 – Y148\149

- DQS3 – Y140\141

Go back to the UCF. See all the location assignments? Well, you probably do not need to touch anything related to calibration; those parts are independent of the datapath and can be located anywhere inside the FPGA. Xilinx decided to put them in the middle of the FPGA. What we care about is the location of the FIFO bits and the DQS delay line. Now the rules for the MIG are:

- The DQS delay line has to be right next to the IOB.

- The FIFO write address and write enable should be right next to the delay line.

- Individual FIFO bits may be located nearby, but don’t have to be right next to the DQS or DQ IOB.

Change the site allocation for the related primitives and run the implementation again. Now hopefully the timing constraints will all pass.

Testing on the hardware

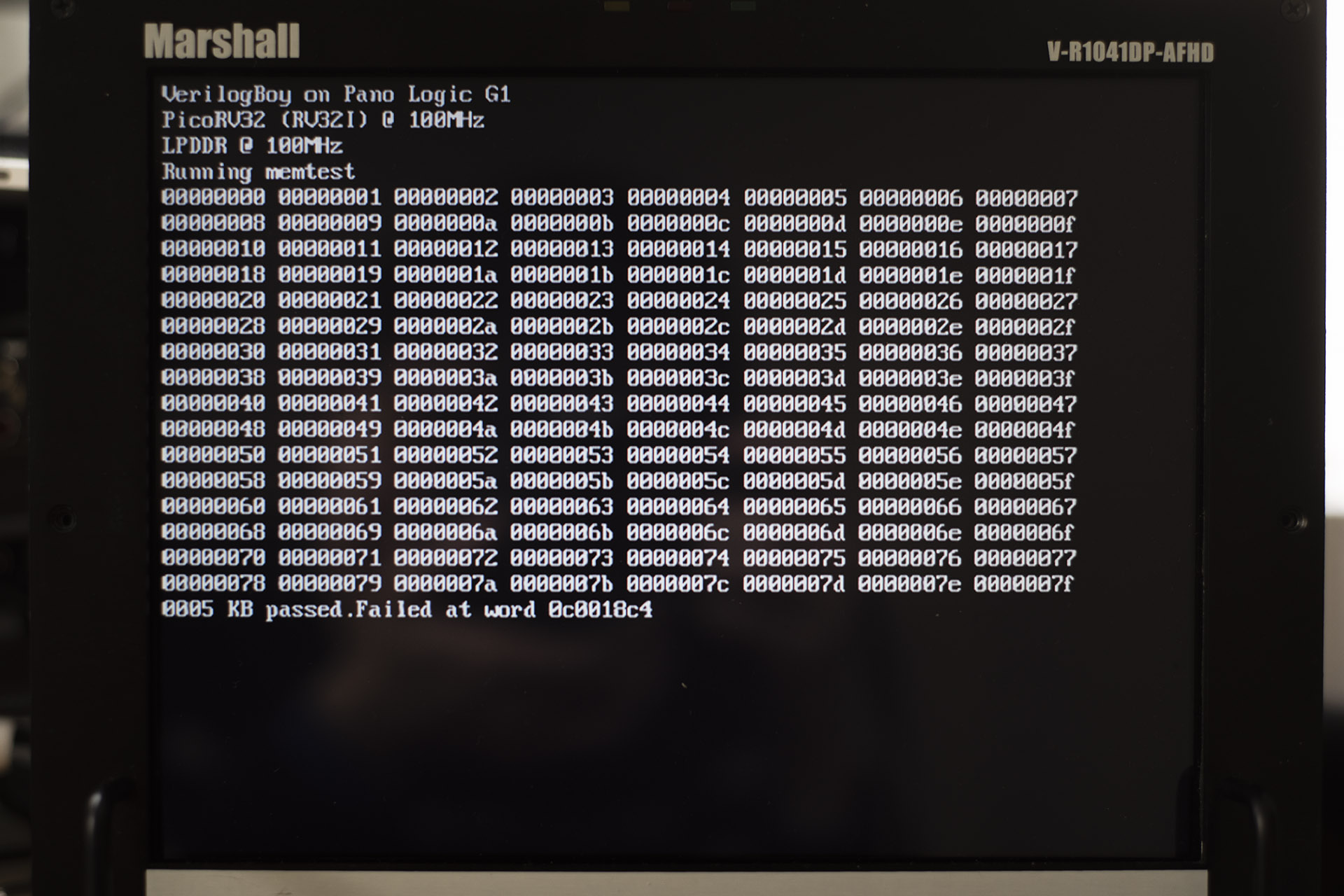

Finally it is time for a test! Well, I would be surprised if it worked the first time… And the result is:

Interesting. It is mostly working if you mean the data is mostly correct, or mostly not working if you mean it is basically guaranteed to fail within 1 second.

Well, it took me one day to debug the whole thing, so here I am just jumping to the conclusion:

There are at least 4 ways I am aware of that can potentially fix this problem:

- The problem may be caused by an incorrect delay line tap setting, meaning the data might be latched too early or too late. Though Xilinx has a calibration circuit that should determine the best tap value, it is not working for me. It reports a higher value than it really should.

- If you see a consistent bit error, try adjusting the FIFO bit site allocation. It may or may not help.

- If you see a consistent bit error and the previous method doesn't help, try to adjust the delay of that specific bit. You can adjust the delay value inside the IBUF for that specific bit. By default they are all set to zero. (If you want to decrease the delay of that bit, increase the delay of all other bits, and increase the DQS delay.)

- Set SLEW for all LPDDR IOs to FAST.
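The trick in the third point (a delay cannot go below zero, so "less delay on one bit" really means "more delay on everything else") can be written down as a tiny normalization step. This is only a sketch: `normalize_delays` is a made-up helper, the values are in abstract IBUF delay steps, and the DQS delay line uses different tap units, so the returned shift would still need to be converted before applying it to DQS.

```c
#include <assert.h>
#include <stddef.h>

/* Convert signed "relative delay wishes" per DQ bit into non-negative
 * settings. Returns the amount that was added to every bit, which is
 * the extra delay DQS needs so the strobe stays aligned with the data. */
static int normalize_delays(const int *wanted, int *setting, size_t n)
{
    int min = 0;
    assert(wanted != NULL && setting != NULL);
    for (size_t i = 0; i < n; i++) {
        if (wanted[i] < min)
            min = wanted[i];
    }
    for (size_t i = 0; i < n; i++)
        setting[i] = wanted[i] - min; /* the most negative bit becomes 0 */
    return -min;
}
```

For example, asking for one step less on bit 2 of a four-bit group, `{0, 0, -1, 0}`, yields the settings `{1, 1, 0, 1}` and a shift of 1 to add to DQS.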

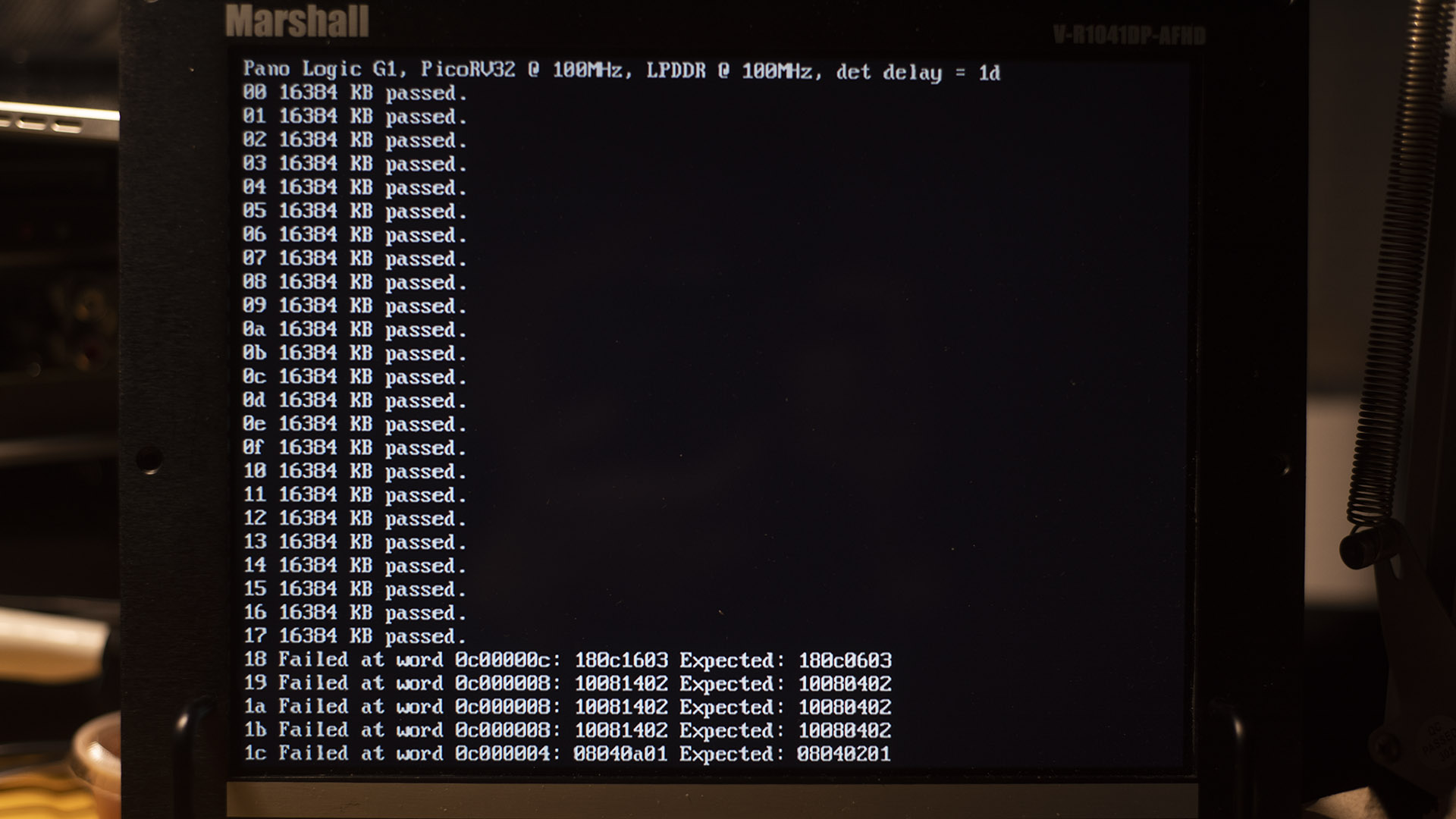

In the end, I used a combination of 1, 2, and 4. Don't forget to incorporate a more complex test pattern to test the DRAM. I also wrote a program to cycle through the different delay tap values to see which works best:

You can see anything from 0x00 to 0x17 works. This is with the slew rate set to fast. If it is set to slow, only delay values 0x01 to 0x04 are usable.

MIG Goes 32bit

Now the 16-bit mode is working, what about 32-bit?

Though only 16-bit mode is supported by the Spartan-3E MIG, the MIG itself is capable of handling 64-bit memories on Spartan-3 and 3A. After all, it is the same MIG. Simply edit mig_parameters_0.v to enable 32-bit support:

    `define DATA_WIDTH 32
    `define DATA_STROBE_WIDTH 4
    `define DATA_MASK_WIDTH 4

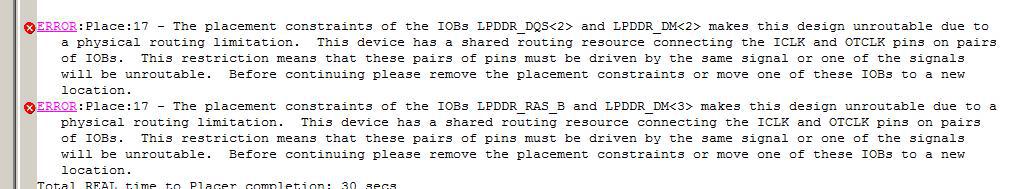

Add constraints for the data and DQS lines, modify the PicoRV - MIG bridge to support 32-bit mode, and we are ready for a test... Then ISE will tell you the real issue:

What is going on?

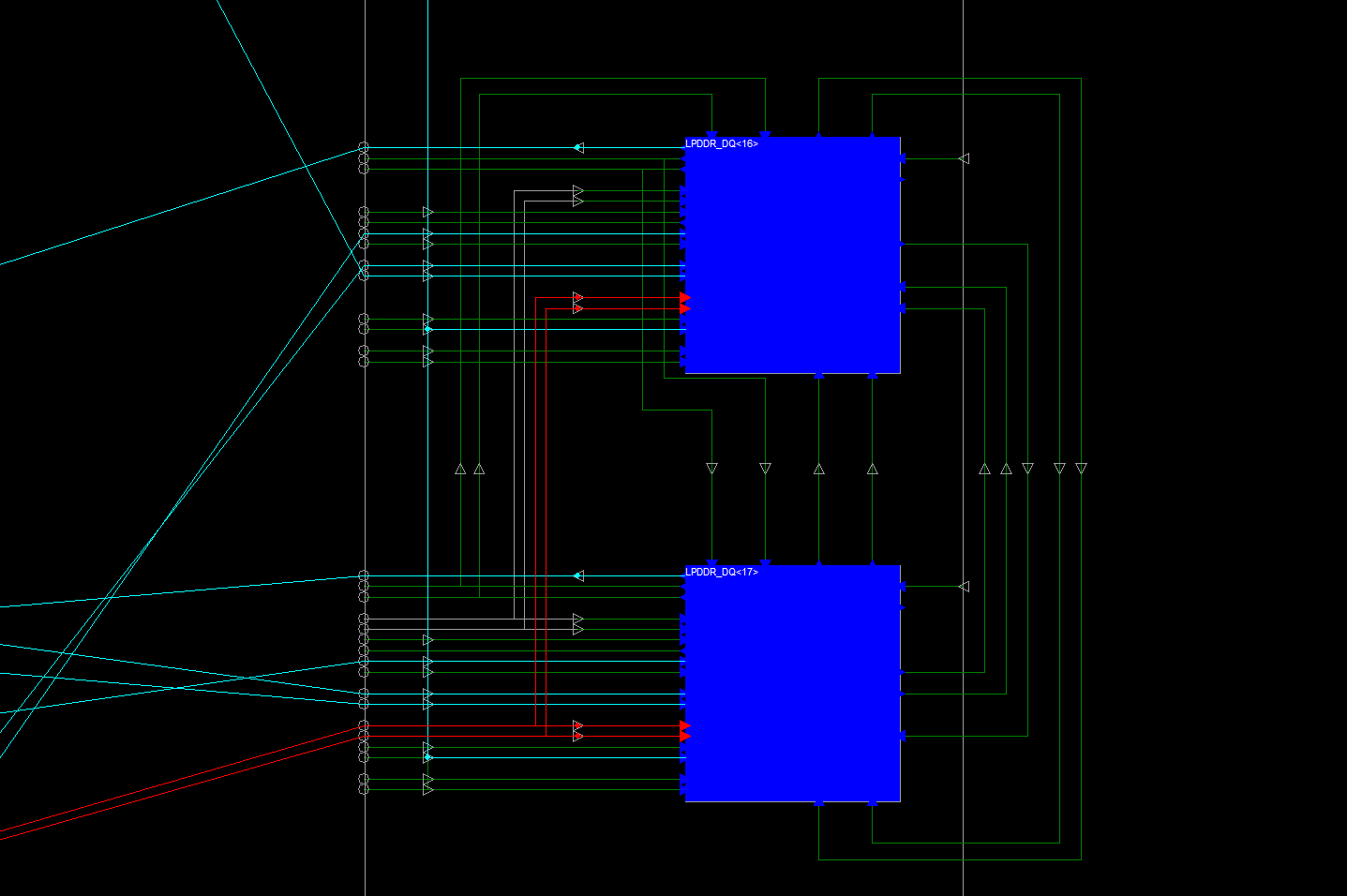

Basically, two IOBs (a pair of IOBs) share the same two clock lines:

This means that, in such a pair, no more than 2 clock lines can be used to output the data.

Normally, if it is two SDR signals, each IOB can use one clock line, and there won't be any conflict at all. But if it is a DDR signal, the IOB needs two clocks (the original clock and a 180 degree shifted clock) to work. So in a pair, the IOBs must either use the same clocks (like in the screenshot above), or only one of the two IOBs can be used.

On the Pano Logic, DM0 and DM1 are in the same IOB pair, so they work together nicely. But for whatever reason, this is not the case for DM2 and DM3: they share clocks with other signals, one with DQS and the other with RAS. The DM and DQ signals use the 90-degree and 270-degree shifted clocks for output, while RAS uses the 0-degree clock. Such a pair requires 3 clocks, and it simply won't work.
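The rule can be expressed as a small check: collect the clock phases each IOB of a pair needs and count the distinct ones. This C sketch only models the rule as described here; the phase flags and function names are invented for illustration and have nothing to do with how ISE performs the check internally.

```c
#include <assert.h>

/* One flag per clock phase an output register may be driven by. */
enum {
    CLK_0   = 1 << 0,
    CLK_90  = 1 << 1,
    CLK_180 = 1 << 2,
    CLK_270 = 1 << 3
};

#define MAX_CLOCKS_PER_PAIR 2

/* Count the distinct clock phases set in the mask. */
static int count_clocks(unsigned mask)
{
    int n = 0;
    while (mask) {
        n += (int)(mask & 1u);
        mask >>= 1;
    }
    return n;
}

/* A pair can be placed only if both IOBs together need at most two clocks. */
static int iob_pair_is_legal(unsigned clocks_a, unsigned clocks_b)
{
    assert((clocks_a | clocks_b) <= 0xFu);
    return count_clocks(clocks_a | clocks_b) <= MAX_CLOCKS_PER_PAIR;
}
```

Two DM outputs both need `CLK_90 | CLK_270`, so the pair uses two clocks and is fine. A DM output paired with RAS on `CLK_0` needs three distinct clocks and is rejected, which matches the error described above.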

Workaround: forget about the DM signals and tie them to ground. DM (data mask) signals are used to mask out unused data bytes so that they are ignored by the RAM. They are needed when you want to write fewer bytes than the minimum transfer length (for 32-bit LPDDR memory it is 8 bytes, but the MIG only supports down to 16 bytes), so if we always write all the bytes in a transfer, the data masks can be ignored.

But the PicoRV32 works with 32-bit (4 byte) transfers, so how do we "expand" them to 16 bytes? The answer is a cache. I guess that's out of the scope of this log, so I will continue on the topic of the cache in the next log.

Before that, we can still use the PicoRV32 to test the 32-bit version of the controller by only writing and validating 4 bytes out of every 16 bytes as a workaround. This tests the 32-bit interface, but limits the usable capacity to 8MB. It is really just for testing.

The result? Of course, it worked.

Conclusion / Takeaways
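The 8MB figure follows directly from the transfer sizes. The C sketch below spells out the arithmetic and one possible way to spread CPU words across bursts. The burst lengths (2 beats for the memory's minimum, 4 beats for the MIG's minimum) are my reading of the 8 byte and 16 byte figures above, and the address mapping is an illustration, not necessarily what the bridge in the repository does.

```c
#include <assert.h>
#include <stdint.h>

#define DRAM_BYTES     (32u * 1024u * 1024u) /* 32MB LPDDR on the G1 */
#define BUS_BYTES      4u                    /* 32-bit data bus */
#define MIG_MIN_BEATS  4u                    /* assumed: 4 beats x 4 bytes = 16 bytes */
#define CPU_WORD_BYTES 4u                    /* PicoRV32 transfers 4 bytes at a time */

/* Bytes moved by one burst of the given length. */
static uint32_t burst_bytes(uint32_t beats)
{
    return beats * BUS_BYTES;
}

/* Capacity left when only one CPU word is used per minimum MIG burst. */
static uint32_t usable_bytes(void)
{
    return DRAM_BYTES / burst_bytes(MIG_MIN_BEATS) * CPU_WORD_BYTES;
}

/* Map a CPU byte address to the DRAM byte address of its own burst. */
static uint32_t cpu_to_dram_addr(uint32_t cpu_addr)
{
    assert(cpu_addr % CPU_WORD_BYTES == 0);
    assert(cpu_addr < usable_bytes());
    return (cpu_addr / CPU_WORD_BYTES) * burst_bytes(MIG_MIN_BEATS);
}
```

Each 4 byte CPU word occupies a full 16 byte burst, so only a quarter of the 32MB is reachable: 32MB / 16 × 4 = 8MB.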

- The LPDDR controller is working, under both 16-bit and 32-bit modes, @ 100MHz.

- Looking at the current timing report, I think 100MHz is really already the upper limit.

- A cache is almost a necessity if you want to use the LPDDR under 32-bit mode. To avoid using a cache, stick with 16-bit mode.

- Source code: https://github.com/zephray/VerilogBoy/tree/f546e0789a65e48243175fab955df40a9778f3e6, under target/panog1 folder. This is for the 16-bit mode. Feel free to copy anything you need.